As we all know, Artificial Intelligence (AI) has gained massive attention over the last couple of years, starting with the launch of ChatGPT by OpenAI on November 30, 2022, which went viral and reached 1 million users in just five days. This was followed by new services based on Large Language Models (LLMs) like Claude (Anthropic), Grok (xAI), Gemini (Google), and LeChat (Mistral). Additionally, a significant amount of venture capital has been invested in AI-related companies, and media coverage across TV, radio, and newspapers has further amplified this attention.

However, I’ve noticed a lack of a clear framework to help everyone understand what is possible with LLMs and other types of AI, as well as their limitations. Many people use “AI” to refer to a variety of topics without clarifying the differences. On one hand, having a single term is useful for selling solutions to non-technical clients or attracting venture capital for AI startups. On the other hand, it can lead to problems when managing stakeholders’ or clients’ expectations. For example, an article published by IBM in February 2024, titled “The Most Valuable AI Use Cases for Business,” lists use cases such as delivering superior customer service, personalizing customer experiences, promoting cross- and up-selling, speeding up operations with AIOps, predictive maintenance, and demand forecasting. For non-technical users, AI might seem like a panacea, often associated with services like ChatGPT or Gemini. But the truth is, LLMs like ChatGPT won’t solve all their problems.

Therefore, my intention in this article is to provide a simple map to understand the different flavors of Artificial Intelligence, including where LLMs fit within this map. I’ll also conclude with what I see as promising for the future and where it makes sense to invest your time as a Data/Applied Scientist and your money from a company perspective.

Before diving into the framework, let’s clarify what LLMs are. Having this clear will help us better understand the different categories in the framework.

LLMs in a nutshell

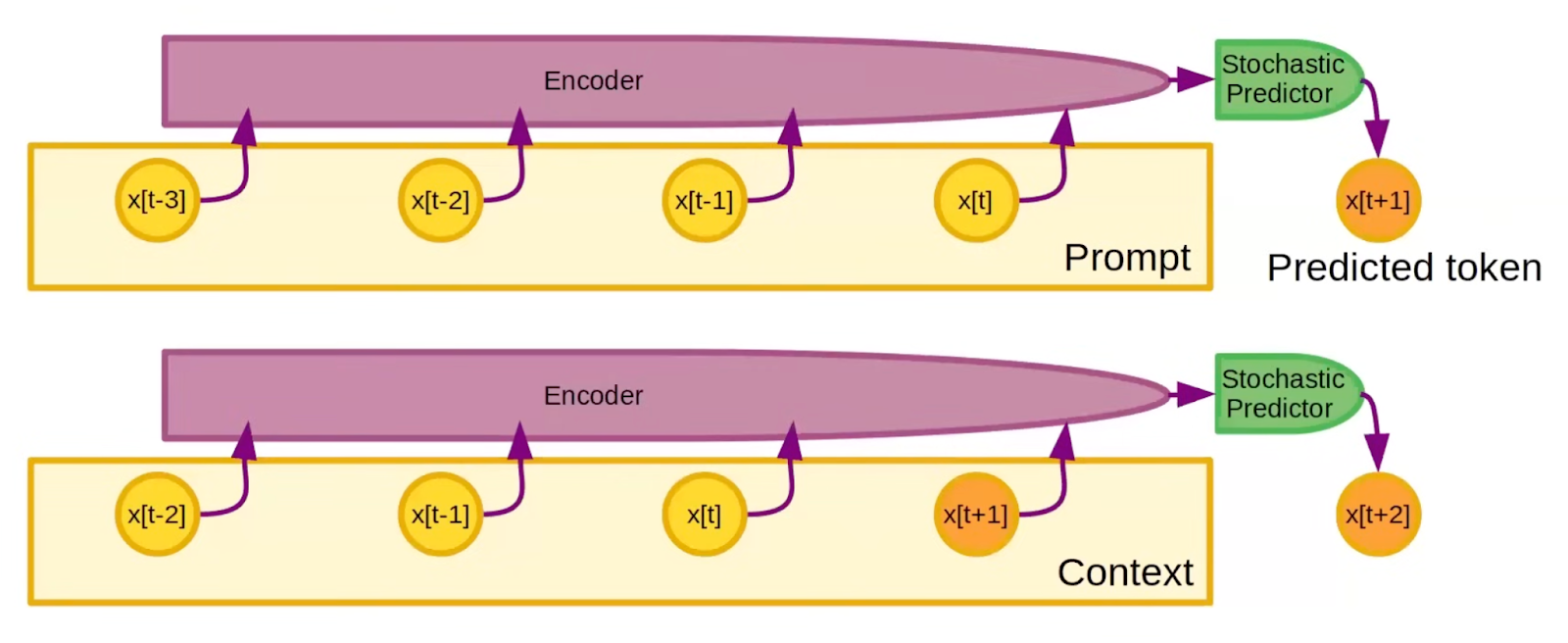

LLMs, or more precisely “Auto-regressive Large Language Models,” operate by predicting the next word (strictly speaking, the next token, which can be a word or a piece of a word) in a sequence based on the context of the preceding words. These models are trained on vast amounts of text data, from which they learn the statistical properties of language. The auto-regressive nature means the model generates text one word at a time, feeding each newly generated word back in as part of the context for predicting the next one. This approach allows the model to produce fluent, contextually appropriate text, which makes it useful for a wide range of natural language processing tasks.
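To make the auto-regressive loop concrete, here is a deliberately tiny sketch in Python. It is a toy under strong simplifying assumptions, not how an LLM is built: instead of a neural network conditioning on the whole preceding context, a bigram table conditions only on the previous word, and the two-sentence corpus is made up. The loop structure (predict, append, repeat) is the part that carries over.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """Count how often each word follows another (a toy stand-in for training)."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = ["<s>"] + sentence.split() + ["</s>"]  # sentence start/end markers
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts: Dict[str, Counter], max_words: int = 20, seed: int = 0) -> str:
    """Auto-regressive generation: sample the next word given the context, append it, repeat."""
    rng = random.Random(seed)
    words = ["<s>"]
    for _ in range(max_words):
        candidates = counts.get(words[-1])
        if not candidates:
            break  # no known continuation
        # Sample proportionally to observed frequencies (the learned statistics).
        next_word = rng.choices(list(candidates), weights=list(candidates.values()))[0]
        if next_word == "</s>":
            break
        words.append(next_word)  # the new word becomes part of the context
    return " ".join(words[1:])


counts = train_bigram(["the cat sat on the mat", "the dog sat on the rug"])
print(generate(counts, seed=1))
```

Sampling (rather than always taking the single most frequent word) is one common design choice; it is why the same model can produce different continuations for the same prompt.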

To achieve better performance, LLMs are typically adapted and steered in several ways. Fine-tuning continues training the pre-trained model on datasets relevant to specific tasks or domains, improving accuracy in specialized areas. Prompt engineering, which leaves the model’s weights untouched, designs the input prompts to guide the model toward the desired outputs. Reinforcement Learning from Human Feedback (RLHF) incorporates human feedback into training, refining the model’s outputs based on human preferences and expectations. Together, these techniques enable LLMs to deliver more accurate, context-aware, and useful responses across various applications.
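RLHF has several stages; the sketch below shows only one of them, under simplifying assumptions: training a reward model from pairwise human preferences with the commonly used loss -log(sigmoid(r_chosen - r_rejected)). The linear model, the two-number "features" per answer, and the preference pairs are all hypothetical. In practice the reward model is a neural network built on a pre-trained language model, and a reinforcement learning step (for example, PPO) then optimizes the LLM against it.

```python
import math
from typing import List, Sequence, Tuple

Features = Sequence[float]


def reward(weights: Sequence[float], features: Features) -> float:
    """Linear reward model: a toy stand-in for a neural reward model."""
    return sum(w * x for w, x in zip(weights, features))


def preference_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), computed in a numerically stable way."""
    margin = r_chosen - r_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def train_reward_model(pairs: List[Tuple[Features, Features]], n_features: int,
                       lr: float = 0.1, epochs: int = 200) -> List[float]:
    """Gradient descent on the pairwise preference loss."""
    weights = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # d(loss)/d(margin) = -sigmoid(-margin) = -1 / (1 + exp(margin))
            grad_margin = -1.0 / (1.0 + math.exp(margin))
            for i in range(n_features):
                weights[i] -= lr * grad_margin * (chosen[i] - rejected[i])
    return weights


# Hypothetical features per answer: (helpfulness score, contains_rudeness flag).
# Each pair is (answer a human preferred, answer the human rejected).
pairs = [((0.9, 0.0), (0.4, 1.0)), ((0.7, 0.0), (0.8, 1.0)), ((0.6, 0.0), (0.2, 0.0))]
weights = train_reward_model(pairs, n_features=2)
print(weights)
```

The loss is small when the reward model scores the human-preferred answer above the rejected one, which is how human preferences end up encoded in a number the later RL step can optimize.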

The two-dimensional framework

Scientific literature offers multiple definitions of (Artificial) Intelligence, but they can be organized along two dimensions. The first concerns whether a definition focuses on thought processes or on behavior (Think vs. Act). The second concerns performance, i.e., whether intelligence is measured by how close it comes to human performance or to ideal performance (Human vs. Rational). Combining these two dimensions gives four categories:

Acting Humanly: The Turing Test approach

Thinking Humanly: The cognitive modeling approach

Thinking Rationally: The “laws of thought” approach

Acting Rationally: The rational agent approach

Stuart Russell and Peter Norvig propose these four categories in their book Artificial Intelligence: A Modern Approach. Let’s dive into each of them.

Note: This categorization is not based on the underlying scientific techniques or approaches (e.g., linear regression, neural networks, reinforcement learning, etc.) but rather on their use cases, underlying assumptions, and limitations. For example, neural networks might be used as building blocks for each type of intelligence in this framework.

Acting Humanly: “The Turing Test approach”

“A computer passes the test if a human interrogator, after posing some written questions, cannot tell whether the written responses come from a person or from a computer.”

-- Russell & Norvig (2021)

For a machine to be described as acting humanly, it would need to understand and generate natural language, have a knowledge representation system to store what it knows and new information, draw new conclusions based on context (automated reasoning), and adapt to new circumstances while detecting and extrapolating patterns (machine learning).

That said, it’s impossible to define a perfect Artificial Intelligence that acts humanly because there’s no definition of perfect human intelligence. We differentiate good from bad using relative comparisons. For example, a drawing from a 10-year-old child could be considered good or bad based on our subjective expectations. Similarly, an intelligent machine could provide good or bad answers, drawings, or songs based on our subjective expectations.

The plain versions of the previously mentioned LLM-based services fall within the definition of Acting Humanly. Beyond ChatGPT, Gemini, and LeChat, other services like video generation (e.g., OpenAI’s Sora), coding assistants (e.g., GitHub Copilot or Tabnine), music generation tools (e.g., AudioCraft by Meta or Mubert), and image generation tools (e.g., DALL·E 3 or Midjourney) also fit into this category. It’s worth highlighting that none of these services has a clear objective or goal beyond producing good-quality output (as judged by human standards).

Thinking Humanly: The cognitive modeling approach

“Cognitive science is the study of the human mind and brain, focusing on how the mind represents and manipulates knowledge and how mental representations and processes are realized in the brain”

-- Cognitive Science | Johns Hopkins University

Studying intelligence from a cognitive science perspective provides insights into human behavior, decision-making, and how we acquire knowledge through thought, experience, and the senses (known as cognition). These insights allow us to build cognitive models and theories of how the mind works and to translate those theories into computer programs. These programs can then perform tasks and be compared against human behavior.

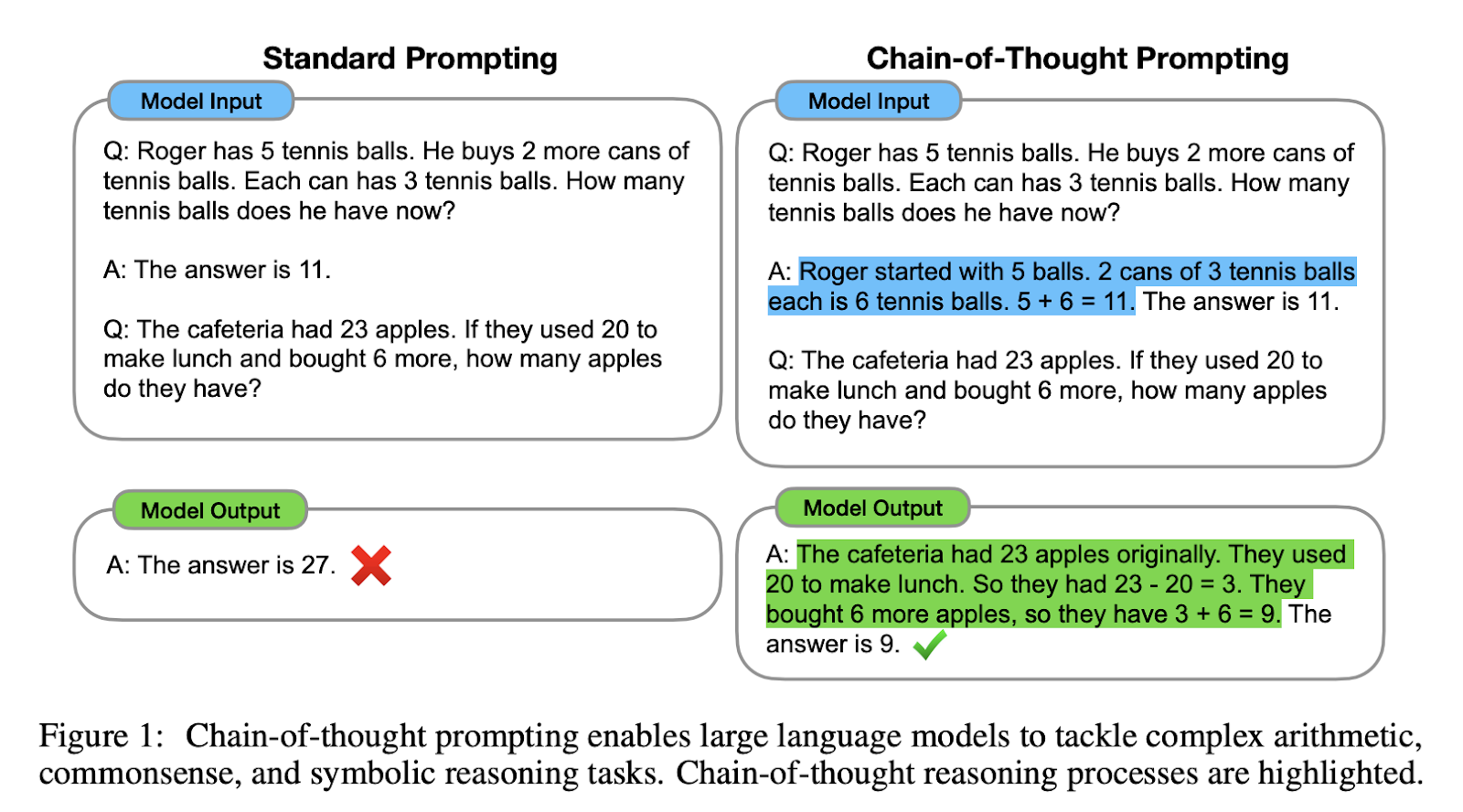

One recent model that has captured the attention of researchers aiming to enhance LLMs is known as “Chain-of-Thought” (CoT) by Google Researchers. The main idea is to use a simple reasoning approach to help LLMs reach correct conclusions without needing additional rational-augmented training or finetuning. In short, “a chain-of-thought is a series of intermediate natural language reasoning steps that lead to the final output.” The following image shows an example taken from the original paper.
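A minimal sketch of few-shot CoT prompting in Python, assuming you already have some way to call an LLM (the call itself is omitted). The exemplar is adapted from the example in the Chain-of-Thought paper; the muffin question and its completion are made up for illustration. The only change from ordinary few-shot prompting is that the exemplar answer spells out its intermediate steps before the final answer.

```python
import re
from typing import Optional, Sequence

# One few-shot exemplar, adapted from the Chain-of-Thought paper.
# Note the worked reasoning before "The answer is ...".
COT_EXEMPLAR = (
    "Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?\n"
    "A: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
    "5 + 6 = 11. The answer is 11.\n"
)


def build_cot_prompt(question: str, exemplars: Sequence[str] = (COT_EXEMPLAR,)) -> str:
    """Prepend worked examples so the model imitates step-by-step reasoning."""
    return "\n".join(exemplars) + "\n" + f"Q: {question}\nA:"


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final number out of a completion that follows the exemplar's format."""
    match = re.search(r"The answer is\s+(-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None


prompt = build_cot_prompt("A baker has 12 muffins and sells 5. How many are left?")
# The prompt would be sent to whichever LLM you use (not shown). A completion in the
# exemplar's style might look like this hypothetical string:
completion = "The baker started with 12 muffins. 12 - 5 = 7. The answer is 7."
print(extract_answer(completion))  # 7
```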

Moreover, benchmarks for LLM-based systems often use CoT in their evaluation settings. For example, the official Llama 3 benchmark compares Llama 3, Gemma, and Mistral using CoT prompting on grade-school math word problems (GSM-8K) and competition-level math problems (MATH). Beyond CoT, other approaches include “Tree-of-Thoughts” (paper) and multi-agent communication (paper).

Examples of systems and frameworks that enhance LLMs in this way include LangChain, AutoGPT, and MetaGPT. They aim to address broad categories of challenges and use cases that require reasoning. They might define a sequence of steps to tackle a specific task and, in some cases, re-evaluate those steps as new information is gathered or thoughts are processed.
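The plan, act, and re-plan pattern these systems follow can be sketched without any LLM at all. In the toy below, which is an illustration and not how LangChain, AutoGPT, or MetaGPT are implemented, a hypothetical rule-based `plan` function stands in for the LLM planner, and the tools, items, and prices are made up. The key idea is that the plan is recomputed after every observation, so a failed step leads to a different next step.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical data sources; the primary price source has no entry for "widget".
PRIMARY_PRICES = {"gadget": 7.5}
BACKUP_PRICES = {"widget": 4.0, "gadget": 7.5}
STOCK = {"widget": 25, "gadget": 10}

# Hypothetical tools the agent can call; each reads from the agent's memory.
TOOLS: Dict[str, Callable[[dict], object]] = {
    "lookup_price": lambda m: PRIMARY_PRICES.get(m["item"]),
    "lookup_price_backup": lambda m: BACKUP_PRICES.get(m["item"]),
    "lookup_stock": lambda m: STOCK.get(m["item"]),
    "compute_value": lambda m: m["price"] * m["lookup_stock"],
}


def plan(memory: dict) -> List[str]:
    """Stand-in for an LLM planner: propose the remaining steps given what is known."""
    if "compute_value" in memory:
        return []  # goal reached
    steps = []
    if "price" not in memory:
        if "lookup_price" not in memory:
            steps.append("lookup_price")
        elif "lookup_price_backup" not in memory:
            steps.append("lookup_price_backup")  # re-evaluated: primary lookup failed
        else:
            raise LookupError(f"no price found for {memory['item']!r}")
    if "lookup_stock" not in memory:
        steps.append("lookup_stock")
    steps.append("compute_value")
    return steps


def run_agent(item: str, max_steps: int = 10) -> Tuple[float, List[str]]:
    memory: dict = {"item": item}
    trace: List[str] = []
    for _ in range(max_steps):
        steps = plan(memory)  # re-plan after every observation
        if not steps:
            return memory["compute_value"], trace
        step = steps[0]
        result = TOOLS[step](memory)  # act
        memory[step] = result  # observe and remember
        trace.append(step)
        if step.startswith("lookup_price") and result is not None:
            memory["price"] = result
    raise RuntimeError("agent did not finish within max_steps")


value, trace = run_agent("widget")
print(value, trace)
```

For "widget", the first price lookup returns nothing, so the next plan switches to the backup source; for "gadget", the original plan runs unchanged.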

Additionally, you might have noticed that the latest versions of ChatGPT, LeChat, and Gemini include some simple cognitive models to improve the (subjective) quality of their answers. However, these additional cognitive models need to be simple to maintain fast response times.

Thinking Rationally: The “laws of thought” approach

“A system is rational if it does the ‘right thing’, given what it knows.”

-- Russell & Norvig (2021)

Some authors have focused on studying intelligence from a logicist point of view. Their goal is to provide an irrefutable reasoning process, often using deductive reasoning (syllogisms) to draw conclusions. For instance, if Socrates is a man and all men are mortal, then we can conclude that Socrates is mortal. However, conventional logic comes with limitations and assumptions, such as requiring certainty about every assertion, which is rarely achievable in practice. Thus, probability and statistics emerged as tools to support reasoning under uncertainty. E. T. Jaynes discussed probability as an extension of logic in his posthumous book Probability Theory: The Logic of Science (2003). Recent developments in this category have focused on causal inference and counterfactual reasoning.
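Deductive reasoning of this kind is easy to mechanize. As a minimal sketch (and not how Prolog works internally, since Prolog answers queries by backward chaining), the Python snippet below applies single-premise rules of the form "if P(x) then Q(x)" to a set of facts until nothing new can be derived. In Prolog, the same knowledge would read `man(socrates).` and `mortal(X) :- man(X).`

```python
from typing import Iterable, Set, Tuple

Fact = Tuple[str, str]  # (predicate, subject), e.g. ("man", "socrates")
Rule = Tuple[str, str]  # (premise, conclusion): premise(X) implies conclusion(X)


def forward_chain(facts: Iterable[Fact], rules: Iterable[Rule]) -> Set[Fact]:
    """Repeatedly apply rules to known facts until no new fact can be derived."""
    derived = set(facts)
    rules = list(rules)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for predicate, subject in list(derived):  # snapshot: we add while iterating
                new_fact = (conclusion, subject)
                if predicate == premise and new_fact not in derived:
                    derived.add(new_fact)
                    changed = True
    return derived


facts = {("man", "socrates")}  # Socrates is a man.
rules = [("man", "mortal")]    # All men are mortal.
knowledge = forward_chain(facts, rules)
print(("mortal", "socrates") in knowledge)  # True
```

Every derived fact follows with certainty from the premises, which is exactly the strength, and the limitation, described above: the premises themselves must be certain.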

Significant advancements in this type of machine intelligence were made decades ago. For instance, Prolog, one of the first logic programming languages, was introduced in 1972; it is rooted in first-order logic, and a Prolog program is a set of facts and rules defining relationships. On the probabilistic side, probabilistic programming languages (PPLs) such as WinBUGS, Stan, and PyMC emerged to leverage computational power to automate inference. These tools allow machines to reach rational (probabilistic) conclusions, but they often require a set of assumptions about the world.
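What PPLs automate can be illustrated by brute force. The sketch below assumes a hypothetical conversion-rate question (12 purchases out of 40 visitors) and a uniform prior, and computes the posterior over the rate on a grid. Stan and PyMC would instead draw samples from the posterior with MCMC, but the ingredients are the same, and they make the "set of assumptions about the world" explicit: the prior and the likelihood.

```python
from typing import List, Tuple


def grid_posterior(successes: int, trials: int,
                   grid_size: int = 1001) -> Tuple[List[float], List[float]]:
    """Posterior over a success rate p on an evenly spaced grid in [0, 1]."""
    grid = [i / (grid_size - 1) for i in range(grid_size)]
    prior = [1.0] * grid_size  # assumption: every rate is equally plausible a priori
    # Assumption: each trial is an independent success/failure with the same rate p.
    likelihood = [p ** successes * (1 - p) ** (trials - successes) for p in grid]
    unnormalized = [pr * lk for pr, lk in zip(prior, likelihood)]
    total = sum(unnormalized)
    return grid, [u / total for u in unnormalized]


grid, posterior = grid_posterior(successes=12, trials=40)
posterior_mean = sum(p * w for p, w in zip(grid, posterior))
prob_above_25 = sum(w for p, w in zip(grid, posterior) if p > 0.25)
print(f"posterior mean = {posterior_mean:.3f}, P(rate > 0.25) = {prob_above_25:.2f}")
```

With a uniform prior the exact posterior is Beta(13, 29), whose mean 13/42 ≈ 0.310 the grid reproduces closely. Grids break down as the number of parameters grows, which is why PPLs rely on sampling instead.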

Unfortunately for some, LLMs do not fall within this intelligence category. LLMs are predictive machines that do not draw conclusions; instead, they predict a statistically likely next word. They model the underlying distribution of words and phrases but lack a notion of truth or facts. Enhancing LLMs with cognitive models might make them seem more “rational,” but they remain limited by their training data and by the quality of the cognitive model.

Acting Rationally: The rational agent approach

“A rational agent is one that acts so as to achieve the best outcome or, when there is uncertainty, the best expected outcome.”

-- Russell & Norvig (2021)

Thinking rationally allows a machine to understand how the world works and make correct inferences about the future, but this alone is not enough to generate intelligent actions. An ideal rational agent should operate autonomously, perceive the environment, adapt to changes, create and pursue goals, and ultimately achieve the best outcome.

It’s important to clarify that there are multiple approaches to creating rational agents. One approach builds on acting humanly, where knowledge representation and reasoning enable an agent to make good decisions (good ≤ best). Another builds on thinking rationally, where an agent makes the decision with the best expected outcome based on the available information. These are just two possible paths to rational behavior based on the previous definitions of artificial intelligence.

A third approach involves developing agents that focus on making optimal decisions by evaluating the potential outcomes of their actions, rather than predicting each element required for decision-making. For example, consider an Amazon warehouse. The system controlling inventory levels doesn’t need to know the exact future demand to make effective reordering decisions. Instead, the agent might learn that low inventory on a Wednesday negatively impacts profits. Therefore, it can order the correct quantity by considering the unit cost of inventory and the estimated marginal profit for each additional unit, without explicitly predicting demand.
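A minimal sketch of this idea in Python, with made-up numbers: instead of forecasting demand, the agent estimates from past Wednesdays how much gross profit each additional unit of stock added, then keeps stocking while that marginal profit exceeds the unit cost. It assumes every stock level from 0 upwards has been observed and that marginal profit diminishes as stock grows (which is what makes stopping at the first unprofitable unit correct); `history` and the cost value are hypothetical.

```python
from collections import defaultdict
from statistics import fmean
from typing import Dict, List, Sequence, Tuple


def estimate_marginal_profit(history: Sequence[Tuple[int, float]]) -> List[float]:
    """Average observed gross profit per stock level, then take differences between
    consecutive levels: element k is the estimated profit of the (k+1)-th unit."""
    by_level: Dict[int, List[float]] = defaultdict(list)
    for units, profit in history:
        by_level[units].append(profit)
    levels = sorted(by_level)  # assumption: levels 0, 1, 2, ... with no gaps
    avg = {level: fmean(by_level[level]) for level in levels}
    return [avg[b] - avg[a] for a, b in zip(levels, levels[1:])]


def target_stock(marginal_profit: Sequence[float], unit_cost: float) -> int:
    """Add units while the next unit's estimated marginal profit exceeds its cost."""
    quantity = 0
    for profit in marginal_profit:
        if profit <= unit_cost:
            break  # with diminishing returns, no later unit will pay off either
        quantity += 1
    return quantity


# Hypothetical (units stocked, gross profit) observations from past Wednesdays.
history = [(0, 0.0), (1, 11.0), (1, 13.0), (2, 22.0), (3, 29.5), (4, 33.5), (5, 35.0)]
marginal = estimate_marginal_profit(history)  # [12.0, 10.0, 7.5, 4.0, 1.5]
print(target_stock(marginal, unit_cost=5.0))  # 3
```

Note that no demand forecast appears anywhere: the agent reasons directly about the consequence of its action (stocking one more unit) on the business metric.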

Building rational agents has been the focus of multiple disciplines, including computer science, engineering, and operations research. Various techniques from these fields address rational decision-making challenges, and they can be divided into deterministic and stochastic approaches. Deterministic techniques include Constraint Programming, Mathematical Programming, Metaheuristics (e.g., simulated annealing, tabu search, genetic algorithms), and tailor-made heuristics. Stochastic approaches include Markov decision processes, reinforcement learning, optimal control, simulation-optimization, stochastic programming, and approximate dynamic programming, among others.
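To give one of the stochastic approaches a concrete shape, here is a minimal value iteration sketch for a Markov decision process. The two-state inventory model, its transition probabilities, rewards, and the discount factor are all hypothetical; the point is that the agent computes a policy (what to do in each state) by weighing immediate rewards against the expected value of the states its actions lead to.

```python
from typing import Dict, List, Tuple

# Hypothetical MDP: MDP[state][action] = list of (probability, next_state, reward).
Outcome = Tuple[float, str, float]
MDP: Dict[str, Dict[str, List[Outcome]]] = {
    "low_stock": {
        "wait": [(1.0, "low_stock", 0.0)],  # nothing to sell
        "reorder": [(0.8, "high_stock", -1.0), (0.2, "low_stock", -1.0)],  # supplier may fail
    },
    "high_stock": {
        "wait": [(0.9, "high_stock", 2.0), (0.1, "low_stock", 2.0)],  # sell; may run low
        "reorder": [(1.0, "high_stock", 1.0)],  # sell, but pay for unneeded stock
    },
}


def q_value(outcomes: List[Outcome], values: Dict[str, float], gamma: float) -> float:
    """Expected immediate reward plus discounted value of the next state."""
    return sum(p * (r + gamma * values[nxt]) for p, nxt, r in outcomes)


def value_iteration(mdp, gamma: float = 0.9, tol: float = 1e-10):
    values = {s: 0.0 for s in mdp}
    while True:
        new_values = {s: max(q_value(o, values, gamma) for o in acts.values())
                      for s, acts in mdp.items()}
        delta = max(abs(new_values[s] - values[s]) for s in mdp)
        values = new_values
        if delta < tol:
            break
    # Greedy policy with respect to the converged values.
    policy = {s: max(acts, key=lambda a: q_value(acts[a], values, gamma))
              for s, acts in mdp.items()}
    return values, policy


values, policy = value_iteration(MDP)
print(policy)  # {'low_stock': 'reorder', 'high_stock': 'wait'}
```

Reordering in the low state pays off even though it costs money now and can fail, because it leads, in expectation, to the more valuable high-stock state.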

So, how do LLMs fit within the rational agent framework? LLMs could be a component in developing rational agents. As mentioned before, LLMs have a knowledge representation system capable of storing new information. However, it is unclear if they can perform causal reasoning instead of merely predicting the next words. If you are interested in current research in this direction, check out The Battle of Giants: Causal AI vs NLP.

The future ahead

“We’re easily fooled into thinking they (LLMs) are intelligent because of their fluency with language, but really, their understanding of reality is very superficial.

(…) They’re useful, there’s no question about that. But on the path towards human-level intelligence, an LLM is basically an off-ramp, a distraction, a dead end.”

-- Yann LeCun, VP & Chief AI Scientist @ Meta (2024)

LLMs seemed to appear almost out of nowhere, and their virality helped build much of the current hype around AI. However, only a few people truly understand their limitations, and thus their real value and potential. In the coming years, we will likely see improvements in LLM-based systems, many of which will stem from adding reasoning capabilities by combining them with cognitive models based on human cognition. Despite these advancements, I align with Yann LeCun (#teamLeCun) in believing that LLMs are a dead end for achieving human-level intelligence and beyond. The core issue is that these predictive models struggle to build or deduce a world model (a set of facts or rules about the world) and then act towards a specific goal. While they will undoubtedly become more useful, we should not invest all our time, resources, and money in this single approach.

These concluding paragraphs aim to open doors and invite exploration into two new realms. The first is Causal Inference (or Causal ML), as enabling machines to think rationally would have a massive impact on making smarter decisions. For instance, imagine being able to estimate how demand for a product is affected by summer promotions while considering competitors’ prices and the uncertainty in your estimation. The second realm is Sequential Decision Making, as most real-life problems follow this pattern (i.e., action, information, action, information, etc.). The ultimate goal is to build systems that act rationally in any situation, capable of exploring, adapting, learning, and deciding at the right time. If you are interested, check the References section for links to some introductory material.
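As a taste of the first realm, here is a minimal sketch of how one might estimate the promotion effect from the example above while adjusting for competitors’ prices and quantifying uncertainty. Everything about the data is hypothetical and simulated: competitor prices are reduced to a high/low flag, promotions are more likely when competitors are expensive, and the true effect is +10 units by construction. The estimator stratifies on the confounder (a simple form of backdoor adjustment) and uses a bootstrap for uncertainty; it assumes competitor price is the only confounder, which a real analysis would need to justify.

```python
import random
from statistics import fmean
from typing import List, Tuple

Row = Tuple[bool, bool, float]  # (competitor_price_high, summer_promo, demand)


def simulate(n: int = 5000, seed: int = 42) -> List[Row]:
    """Hypothetical data in which competitor prices drive both promotions and demand."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        competitor_high = rng.random() < 0.5
        promo = rng.random() < (0.8 if competitor_high else 0.2)
        demand = 100 + 10 * promo + 30 * competitor_high + rng.gauss(0, 5)
        rows.append((competitor_high, promo, demand))
    return rows


def naive_effect(rows: List[Row]) -> float:
    """Difference in mean demand, ignoring competitor prices (confounded)."""
    return (fmean([d for _, p, d in rows if p])
            - fmean([d for _, p, d in rows if not p]))


def adjusted_effect(rows: List[Row]) -> float:
    """Compare promo vs no promo within each competitor-price stratum,
    then weight each stratum by its share of the data."""
    effect = 0.0
    for level in (False, True):
        stratum = [r for r in rows if r[0] == level]
        treated = fmean([d for _, p, d in stratum if p])
        control = fmean([d for _, p, d in stratum if not p])
        effect += len(stratum) / len(rows) * (treated - control)
    return effect


def bootstrap_interval(rows: List[Row], n_boot: int = 200,
                       seed: int = 0) -> Tuple[float, float]:
    """Approximate 95% percentile interval for the adjusted effect."""
    rng = random.Random(seed)
    estimates = sorted(adjusted_effect(rng.choices(rows, k=len(rows)))
                       for _ in range(n_boot))
    return estimates[int(0.025 * n_boot)], estimates[int(0.975 * n_boot) - 1]


rows = simulate()
low, high = bootstrap_interval(rows)
print(f"naive: {naive_effect(rows):.1f}  adjusted: {adjusted_effect(rows):.1f}  "
      f"95% interval: [{low:.1f}, {high:.1f}]")
```

The naive difference should come out inflated (near 28 in expectation here, because promoted weeks are mostly high-competitor-price weeks), while the stratified estimate should land near the true +10. Regression adjustment, matching, or double machine learning are common alternatives to stratification once there are many confounders.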

For companies and organizations, I suggest starting to explore, research, and invest in AI that acts rationally, while also providing robust LLM-based systems to enhance your workforce. Companies should not rely on artificial human-level intelligence for decision-making. Instead, they should seek systems that make optimal, rational decisions to maximize business metrics within specific constraints. Conversely, I advise against spending significant resources on developing custom LLM-based systems. Many researchers and applied scientists are already working on this, and LLM-based systems are expected to become commodities. In a few years, high-quality solutions will be readily available at competitive prices.

In conclusion, although Large Language Models (LLMs) boast remarkable capabilities, our strategic focus should shift toward developing AI systems capable of making rational, informed decisions. Such a shift will drive significant advancements and have a profound impact not only in the corporate sector but also within non-profit organizations and governmental bodies, ensuring that our efforts and investments contribute positively across all facets of society.

I hope you enjoyed this blog post and found it useful. If you would like to connect, you can find me on Twitter (“X”) as @_mabolivar or on LinkedIn. I’ll be happy to chat.

---

A big thank you to Biagio Antonelli, Ezequiel Smucler, Sérgio Ribeiro, and Juan David Bolívar. Your feedback was incredibly valuable, even when we disagreed, and it helped shape this article for the better.

References

Here are a few useful and interesting references:

[General]

[Video] Yann LeCun | Objective-Driven AI: Towards AI systems that can learn, remember, reason, and plan

[Video] The Battle of Giants: Causal AI vs NLP by Aleksander Molak

Generative AI Sucks: Meta’s Chief AI Scientist Calls For A Shift To Objective-Driven AI @ Forbes

5 Best AI Music Generators of 2024 (I Tested Them All) @ Medium

[Causal Inference]

[Book] Causal Inference and Discovery in Python by Aleksander Molak

[Book] Causal Inference for the Brave and True by Matheus Facure

[Sequential Decision Making]

[Video] Sequential Decision Analytics by Warren Powell

[Book] Sequential Decision Analytics and Modeling by Warren Powell

[Book] Artificial Intelligence: A Modern Approach by Russell & Norvig

[Book] Reinforcement Learning: An Introduction by Sutton & Barto